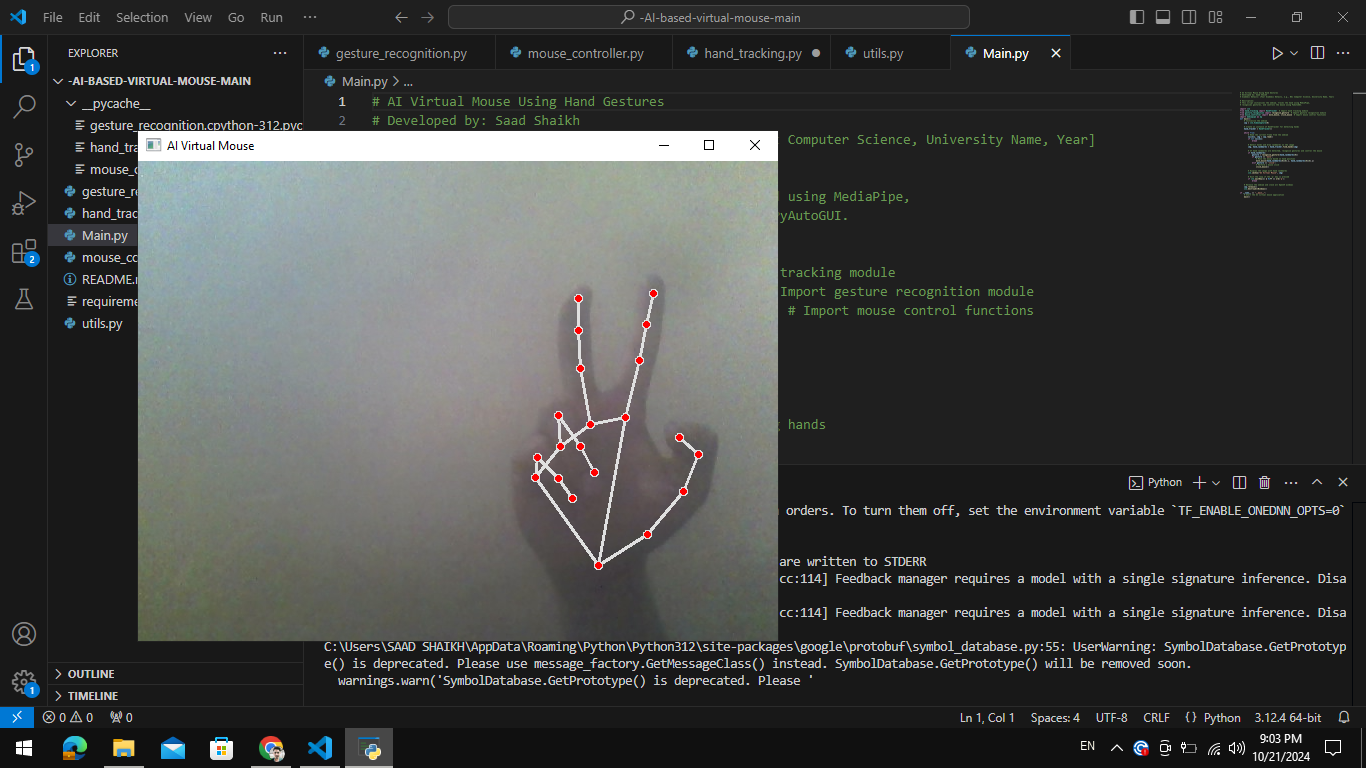

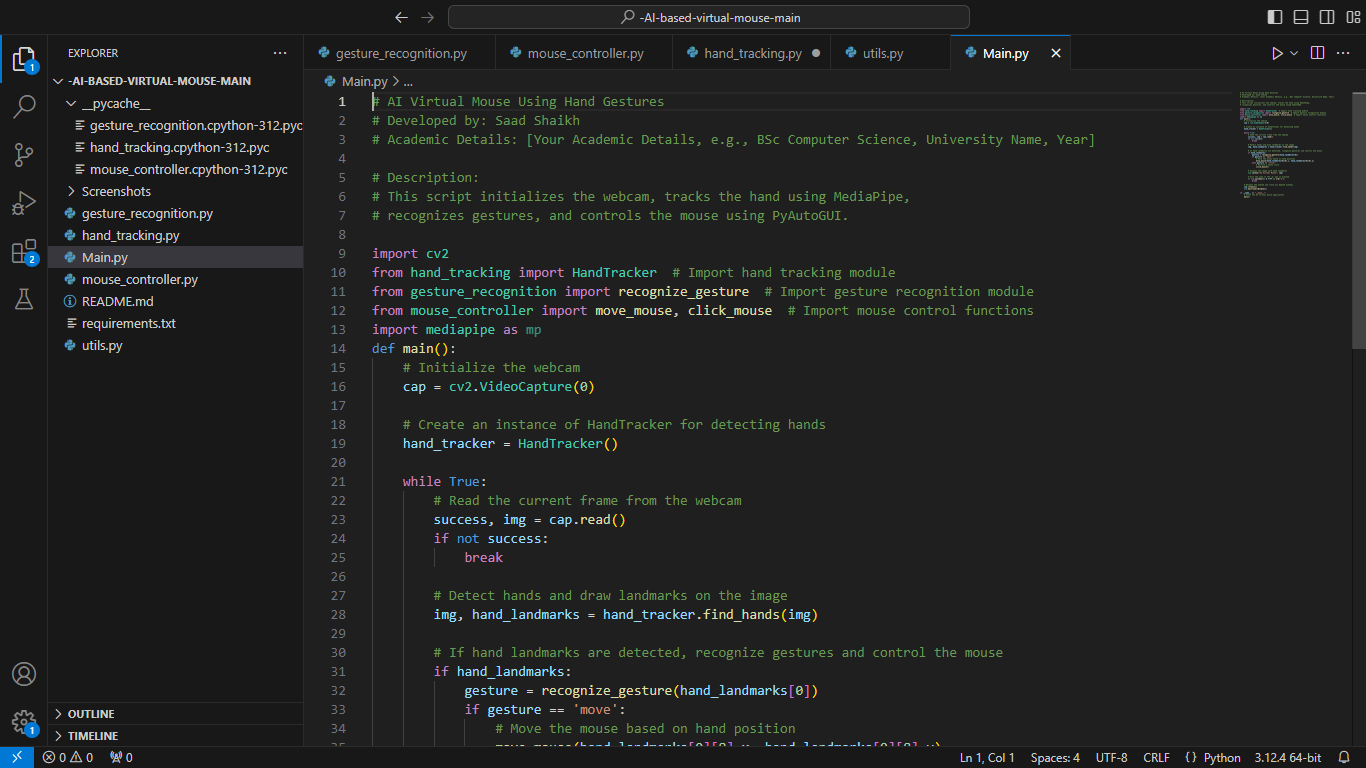

This project implements a virtual mouse controlled by hand gestures captured through a webcam. The AI Virtual Mouse uses computer vision for hand tracking and gesture recognition to move the pointer and perform actions such as clicks, providing a hands-free alternative to a physical mouse.

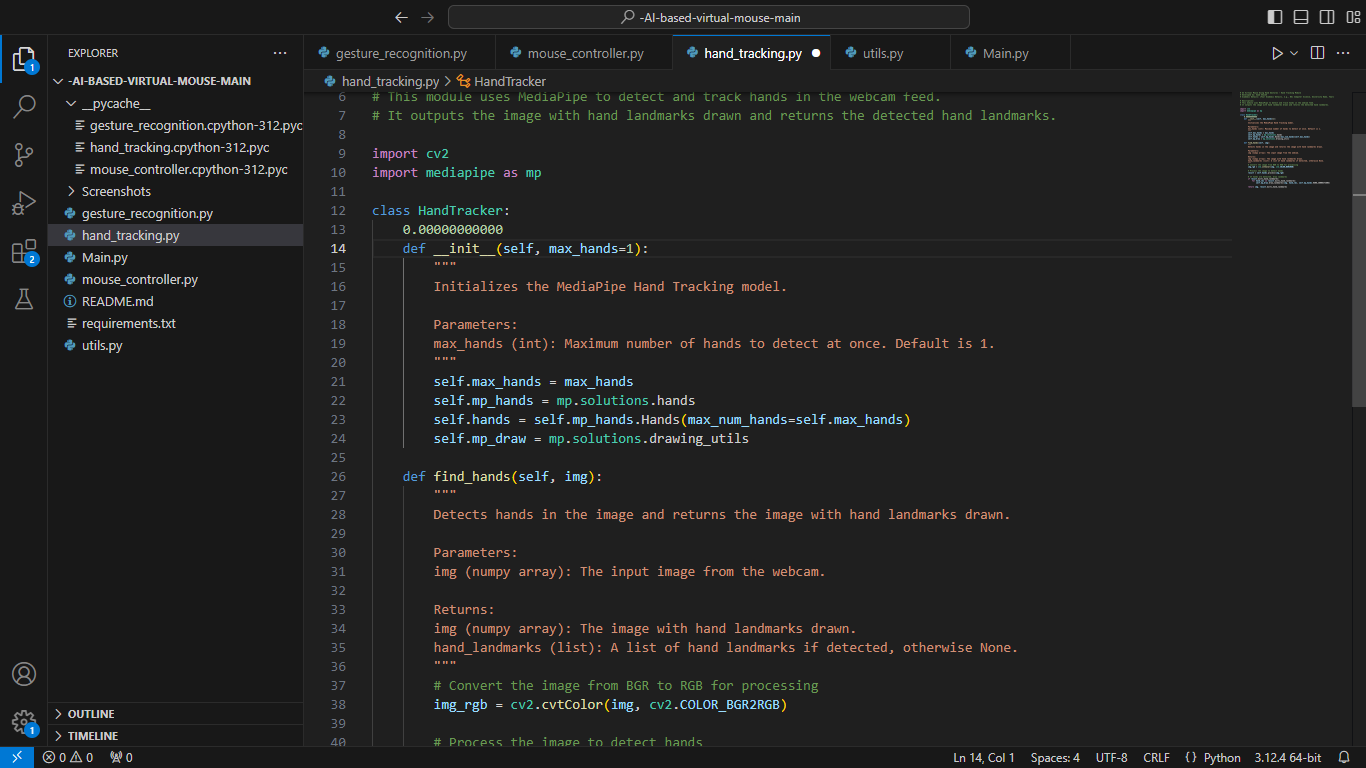

- Hand tracking using MediaPipe.

- Gesture recognition for mouse movements and actions.

- Real-time mouse control using hand gestures like:

  - Moving the mouse pointer.

  - Left click with a pinching gesture.

  - Mouse scroll with specific gestures.

- OpenCV: For real-time video capture and image processing.

- MediaPipe: For hand detection and tracking.

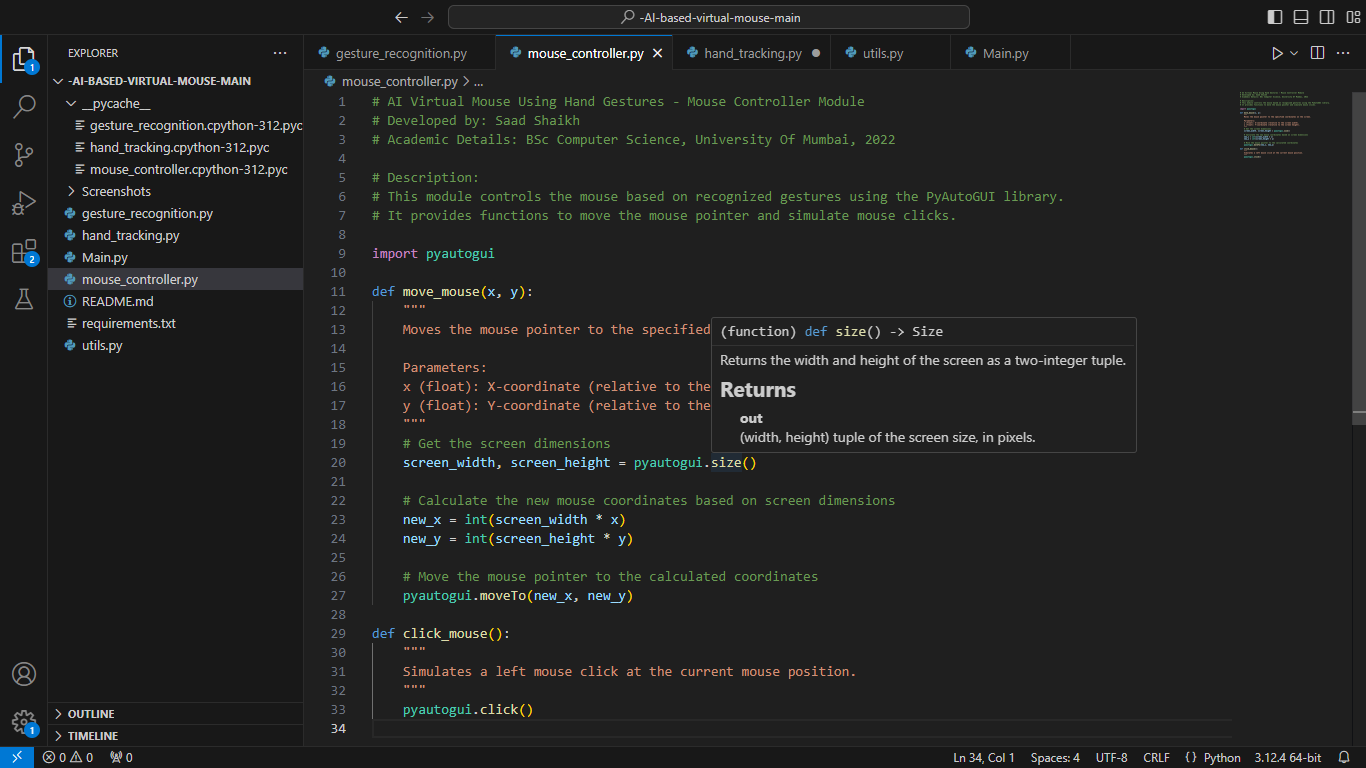

- PyAutoGUI: To simulate mouse actions like movement and clicks.

- NumPy: For numerical operations and processing coordinates.

- Hand Tracking: The webcam captures frames, which are processed with MediaPipe to detect and track hands.

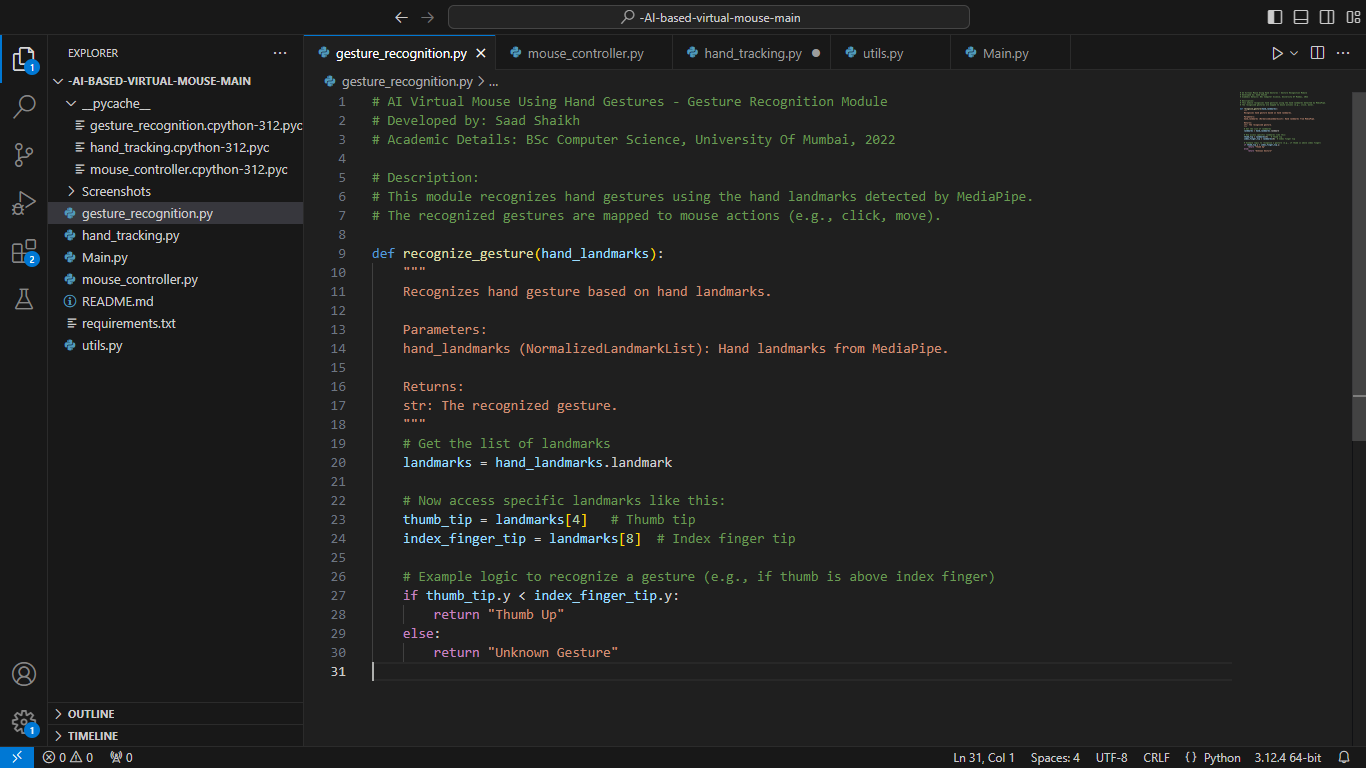

- Gesture Recognition: The hand landmarks provided by MediaPipe are analyzed to identify gestures (e.g., pinching fingers for clicking, moving the index finger to move the cursor).

- Mouse Control: The recognized gestures are used to simulate mouse movements and actions using PyAutoGUI.
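
The gesture recognition and mouse control steps above can be sketched roughly as follows. This is a minimal illustration, not the project's actual code: it assumes landmarks arrive as a list of 21 normalized `(x, y)` pairs in MediaPipe Hands' ordering (index 4 is the thumb tip, index 8 is the index fingertip). The helper names `is_pinching` and `to_screen`, the `threshold` value, and the `margin` value are hypothetical choices made for this example.

```python
import math

# Landmark indices in MediaPipe Hands' 21-point hand model.
THUMB_TIP = 4
INDEX_FINGER_TIP = 8


def is_pinching(landmarks, threshold=0.05):
    """Return True when the thumb tip and index fingertip are close together.

    `landmarks` is a sequence of (x, y) pairs normalized to [0, 1].
    The threshold is an assumed value and would need tuning per camera.
    """
    tx, ty = landmarks[THUMB_TIP]
    ix, iy = landmarks[INDEX_FINGER_TIP]
    return math.hypot(tx - ix, ty - iy) < threshold


def to_screen(x, y, screen_w, screen_h, margin=0.1):
    """Map a normalized fingertip position to pixel coordinates.

    Only the central region of the frame (inset by `margin` on each side)
    is mapped to the full screen, so the edges stay reachable without
    moving the hand out of view. Values outside that region are clamped.
    """
    span = 1.0 - 2.0 * margin
    nx = min(max((x - margin) / span, 0.0), 1.0)
    ny = min(max((y - margin) / span, 0.0), 1.0)
    return int(nx * (screen_w - 1)), int(ny * (screen_h - 1))
```

In a full loop, the pixel coordinates would typically be passed to `pyautogui.moveTo(x, y)`, and a detected pinch would trigger `pyautogui.click()`. How the project debounces clicks or smooths cursor motion is not described here, so those details are left out.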

- Python 3.x

- A webcam

Screenshots